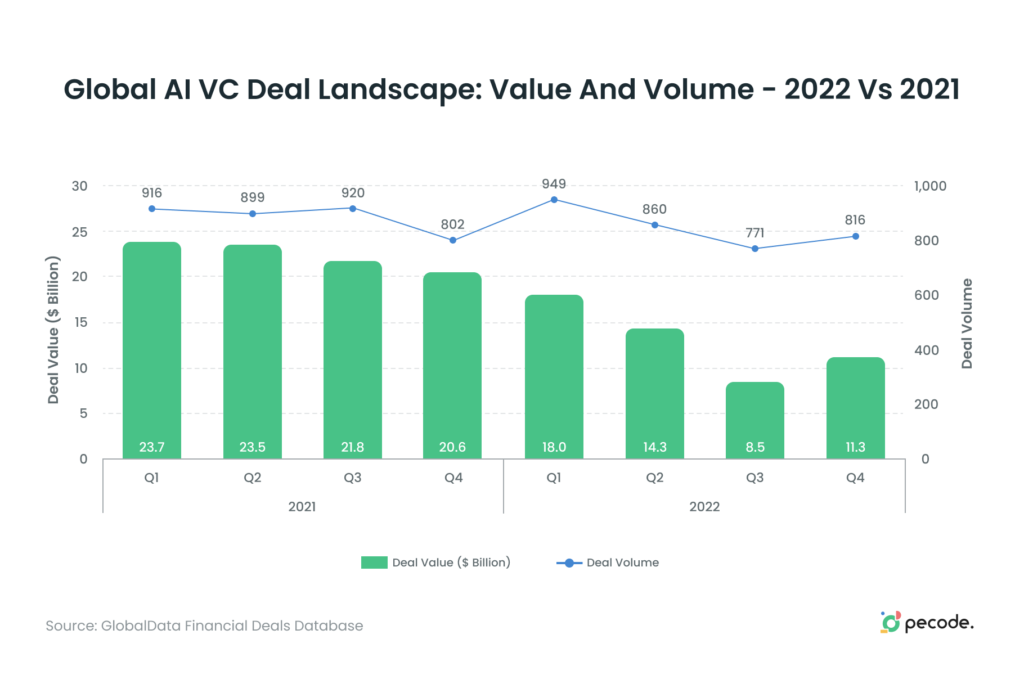

52.1 billion USD

That’s how much funding 3,198 AI startups raised in VC deals in 2022. Following the ChatGPT launch and the boom in AI products that year, investors are eager to be part of this emerging industry.

The rapid growth of funding for AI-based products creates many opportunities for startups that bring AI into everyday processes. Besides fully AI-based projects, companies that add AI to existing products also get a substantial funding boost.

However, an environment that moves this fast has its own pitfalls and dangers. When growth is quick and unexpected, there is a risk of losing the product’s most important attribute: quality.

The euphoria caused by funding growth shifts the focus from keeping the app working flawlessly to exploring new features and fueling new marketing campaigns. As a result, startups may struggle to keep the quality of their app at an acceptable level. That makes QA a must-have for every startup in this industry.

Still, how do you handle quality assurance testing for AI products? The complexity of these apps certainly adds challenges and uncertainty to regular QA practices. Thankfully, we already know the key things to focus on when testing AI products.

Now, let’s get into the details.


The basics of Quality Assurance

When it comes to Quality Assurance, there are two main approaches: manual and automated. In order to choose the best approach for your project, it’s important to understand the differences between them.

Manual QA involves human testers examining the software product to identify defects and inconsistencies and to ensure that it meets all requirements. This includes running various tests and checks such as functional, regression, usability, and exploratory testing. Manual QA is flexible and can adapt to changing requirements and unforeseen scenarios. It can also catch issues that automated tests may miss, such as visual and usability defects. However, manual testing takes more time, requires skilled testers, and can be prone to human error.

On the other hand, automated QA involves running pre-scripted tests using specialized software tools. This approach allows for faster and more efficient testing with tests being run automatically. Automated testing is useful for repetitive tasks and large-scale testing. However, it may miss certain types of defects that require human observation, and it can be more difficult to adapt to changing requirements. It’s important to weigh the pros and cons of both approaches to determine which is most suitable for your project.

There is also a General QA approach, which is basically a mix of manual and automated QA. That’s especially good for enterprise companies that develop complex software and need to go through both manual and automated scenarios.

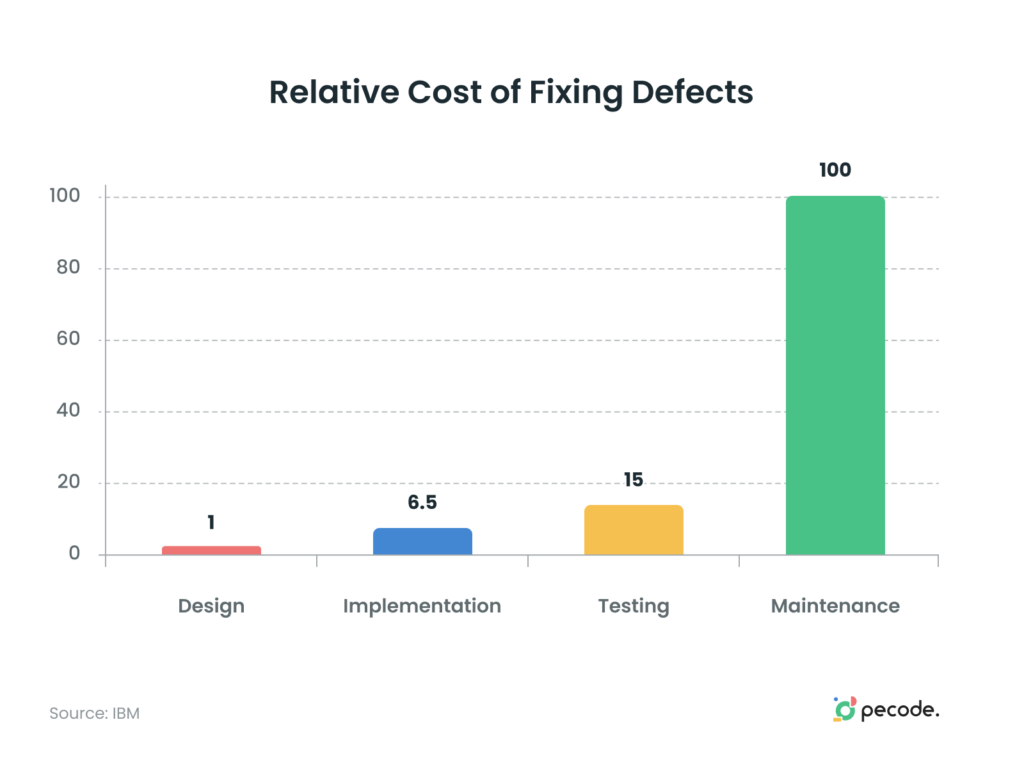

It’s important to understand that the earlier you implement QA, the more resources you will save during the whole development process. IBM found that the cost of fixing defects is 15 times higher in the production phase than during the requirements phase and that defects found during production are 100 times more expensive to fix than those found during the design phase.

How Quality Assurance Works with AI

In ensuring the quality of AI products, the quality of the model and of the data is crucial. Both are widely discussed as core topics in statistics and machine learning. However, quality assurance must also account for practical aspects such as large volumes of real-world data and online learning. Additionally, it’s essential to acknowledge that even in mission-critical areas, the model’s accuracy may not be 100%.

Another concern is customers who may not fully understand the characteristics of AI products. Whether the development is deductive or inductive, the higher the expectations, the more robust quality assurance must be. And if the customer lacks a good understanding of the product, communication and education are necessary.

Given the complexity of AI-based products, beyond the regular product testing needs you must also consider the 5 axes of Quality Assurance in AI:

- Data Integrity

- Model Robustness

- System Quality

- Process Agility

- Customer Expectations

The following sections look at the specific issues behind each of these axes.


Data Integrity and Validation in AI

Continuous testing of training data is the basis of a well-functioning AI. Data is the most important part of the whole QA process. Its validation and usability verification are the foundation of the entire production process.

For QA engineers, there are two significant things to work on: the hyperparameter configuration data and the training data. The configuration data is usually tested using validation methods like cross-validation. All AI projects should use these methods to make sure the configuration is correct. It’s just standard practice.
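To make the cross-validation idea concrete, here is a minimal sketch in plain Python. The toy 1-D nearest-neighbor model, the data, and the candidate values are all assumptions made for illustration; a real project would plug in its own model and typically use an ML library.

```python
from statistics import mean


def k_fold_indices(n_samples, n_folds):
    """Yield (train_idx, val_idx) pairs; every sample is validated exactly once."""
    indices = list(range(n_samples))
    for fold in range(n_folds):
        val_idx = indices[fold::n_folds]
        held_out = set(val_idx)
        train_idx = [i for i in indices if i not in held_out]
        yield train_idx, val_idx


def knn_predict(train_x, train_y, x, k):
    """Majority vote among the k training points closest to x (toy 1-D model)."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:k]
    votes = sum(train_y[i] for i in nearest)
    return 1 if votes * 2 > len(nearest) else 0


def cross_val_accuracy(xs, ys, k, n_folds=5):
    """Mean validation accuracy of the toy model for one hyperparameter value."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(xs), n_folds):
        tx = [xs[i] for i in train_idx]
        ty = [ys[i] for i in train_idx]
        hits = sum(knn_predict(tx, ty, xs[i], k) == ys[i] for i in val_idx)
        scores.append(hits / len(val_idx))
    return mean(scores)


# Hypothetical data: the label is 1 when the feature exceeds 10, plus one noisy label.
xs = list(range(20))
ys = [1 if x > 10 else 0 for x in xs]
ys[3] = 1  # injected label noise

candidates = [1, 3, 5]  # hyperparameter values under test
results = {k: cross_val_accuracy(xs, ys, k) for k in candidates}
best_k = max(results, key=results.get)
print(results, "best k:", best_k)
```

The point for QA is less the winning value than the habit: every configuration is scored on data the model did not see while fitting.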

The bulk of data testing happens during the training stage of AI development. It involves a list of requirements that need to be checked and assured:

- The amount of data is sufficient and its cost is appropriate.

- The statistical properties required of the sample are met.

- Training data and validation data are independent.

- The sample appropriately covers the necessary elements.

- A mechanism to ensure independence and confidentiality is established.

- The legal and ethical aspects are considered.
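Some of the checks above can be automated. The sketch below covers three of them (data volume, train/validation independence, and class coverage). The function name, the row format, and the thresholds are hypothetical; the legal, ethical, and confidentiality items still need human review.

```python
from collections import Counter


def check_training_data(train_rows, val_rows, min_rows, max_class_share):
    """Return a list of problems found; an empty list means all checks passed.

    Rows are (features, label) tuples with hashable features.
    The thresholds are illustrative assumptions, not recommended values.
    """
    problems = []

    # 1. Is there enough data?
    if len(train_rows) < min_rows:
        problems.append(f"only {len(train_rows)} training rows, need {min_rows}")

    # 2. Are training and validation data independent (no shared rows)?
    train_features = {features for features, _ in train_rows}
    val_features = {features for features, _ in val_rows}
    overlap = train_features & val_features
    if overlap:
        problems.append(f"{len(overlap)} rows appear in both training and validation sets")

    # 3. Is any single class dominating the sample?
    label_counts = Counter(label for _, label in train_rows)
    if label_counts:
        top_label, top_count = label_counts.most_common(1)[0]
        share = top_count / len(train_rows)
        if share > max_class_share:
            problems.append(f"class {top_label!r} makes up {share:.0%} of training rows")

    return problems


# Hypothetical example data with a leaked row and an over-represented class.
train = [((1.0, 2.0), "cat"), ((1.5, 1.8), "cat"), ((5.0, 8.0), "dog"), ((1.1, 2.1), "cat")]
val = [((1.0, 2.0), "cat"), ((6.0, 9.0), "dog")]

for problem in check_training_data(train, val, min_rows=3, max_class_share=0.7):
    print("FAIL:", problem)
```

Running a check like this on every data refresh turns the list into a repeatable gate rather than a one-time review.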

In production, the data testing routine turns into continuously verifying that the AI still performs well on new real-world data.


Model Validation and Robustness

Model Robustness is a crucial aspect of quality assurance in machine learning. It refers to the accuracy and reliability of the model over time, and how well it can handle degrading or changing data. Like Data Integrity, Model Robustness is a widely discussed core topic in statistics and machine learning.

To ensure Model Robustness, we must consider several factors. First, we need to evaluate the accuracy of the model, using metrics like accuracy (the rate of correct answers), recall, and F-score. Generalization performance and AUROC (Area Under the Receiver Operating Characteristic curve) are also important indicators of the model’s quality. We need to validate the model frequently and ensure it’s not affected by noise. This involves cross-validating the model with diverse data that includes semantic, social, and cultural aspects.
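For readers who want to see how these metrics are computed, here is a small sketch for a binary classifier in plain Python. The labels, scores, and the 0.5 decision threshold are made-up illustration values; in practice an ML library would usually provide these functions.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def auroc(y_true, scores):
    """Chance that a random positive is scored above a random negative (ties count half)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical evaluation set: true labels and the model's predicted scores.
y_true = [1, 0, 1, 1, 0, 0]
scores = [0.9, 0.2, 0.6, 0.4, 0.5, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # assumed decision threshold

print(classification_metrics(y_true, y_pred))
print("AUROC:", auroc(y_true, scores))
```

Note that accuracy, recall, and F1 depend on the chosen threshold, while AUROC is computed from the raw scores, which is one reason to track both.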

We must also address issues like model degradation and obsolescence during training and operation. As learning progresses, we need to evaluate whether the algorithm is appropriate and whether the hyperparameters are optimal. We must also check whether the model has become stuck in a local optimum.

Finally, it’s important to consider whether the model will become obsolete during the mid-to-long-term operation process. To ensure long-term robustness, we need to develop strategies to handle changes in data and improve the model’s performance.
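One common way to notice that production data has moved away from the training data is the Population Stability Index (PSI). We name it here as one possible monitoring technique, not the only one. The sketch below is a plain-Python version; the bin count and the 0.25 alert level are a widely quoted rule of thumb, used here as an assumption.

```python
import math


def population_stability_index(reference, current, n_bins=5):
    """PSI between two samples of one numeric feature.

    Bins are equal-width over the range of the reference sample.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins or 1.0  # guard against a constant reference feature

    def bin_shares(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    ref = bin_shares(reference)
    cur = bin_shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Hypothetical feature values: training-time sample vs. a shifted production sample.
training_sample = [float(v) for v in range(100)]
production_sample = [v + 40.0 for v in training_sample]

psi = population_stability_index(training_sample, production_sample)
ALERT_LEVEL = 0.25  # assumed rule-of-thumb threshold
print(f"PSI = {psi:.2f}", "-> investigate drift" if psi > ALERT_LEVEL else "-> stable")
```

A check like this only flags that the input distribution changed; deciding whether to retrain remains a judgment call for the team.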


AI System Quality

In System Quality, QA experts consider the quality of the entire AI product.

First, it is essential to determine if the system is providing value appropriately. The meaning of value depends on the system, domain, and business model. For AI products, there is a need to iteratively examine how their value is perceived.

AI products combine deductive development (behavior derived from explicit specifications) and inductive development (behavior learned from data). We can divide and conquer the former, but not the latter. The difference must be identified.

A quality incident covers a severe degradation in quality, its effects, and the overall event that includes both. We need to keep the severity of quality incidents at an acceptable level. Quality incidents can cause economic damage, impact society and the environment, cause discomfort, be unattractive, lack meaning, or be unethical.

For AI products, we need to consider not only functional quality incidents such as misclassification, but also whether the behavior of the entire system will deteriorate. When considering quality incidents, we need to fully consider the triggering events. Triggering events can happen inside or outside the AI product, and we need to examine their frequency of occurrence. We also need to consider how much damage can be reduced and the protective mechanisms, safety functions, and attack resistance in place.
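A protective mechanism can be as simple as a wrapper that refuses to act on a failed or low-confidence prediction. The sketch below assumes a model that returns a `(label, confidence)` pair; the names `guarded_predict` and `Decision`, the threshold, and the fallback label are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    label: str
    source: str  # "model" or "fallback"


def guarded_predict(model, features, min_confidence=0.8,
                    fallback_label="needs_human_review"):
    """Call the model, but degrade safely on errors or low-confidence output."""
    try:
        label, confidence = model(features)
    except Exception:
        # Triggering event inside the AI component: the model call itself failed.
        return Decision(fallback_label, "fallback")
    if confidence < min_confidence:
        # The model answered, but not confidently enough to act on automatically.
        return Decision(fallback_label, "fallback")
    return Decision(label, "model")


# Hypothetical stand-in models used only to exercise the wrapper.
def confident_model(features):
    return "approve", 0.95


def unsure_model(features):
    return "approve", 0.55


def broken_model(features):
    raise RuntimeError("model server unavailable")


for model in (confident_model, unsure_model, broken_model):
    print(model.__name__, "->", guarded_predict(model, {"amount": 120}))
```

The design choice is that the non-AI part of the system, not the model, decides what happens when the model cannot be trusted.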

The structure of AI components and non-AI components must also be taken into account. We also need to think about how quickly and appropriately changes in both AI and non-AI components can be reflected, and whether the impact of failures can be kept low enough.


Process Agility

Process Agility means developing high-quality AI products through a development process that is both flexible and automated, carried out by a team that works closely together.

Data collection needs to be fast, and development cycles must be short. Developers must quickly fix any issues that arise and be able to add new features easily. Rollback options should be available in case of problems, and releases must be evaluated and tested carefully.
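As an illustration of evaluating a release with a rollback option, here is a minimal release-gate sketch. The dictionary layout, the metric names, and the allowed regression margin are assumptions made for this example.

```python
def choose_release(current, candidate, max_regression=0.01):
    """Decide which model version to serve.

    `current` and `candidate` are dicts with a "version" string and a "metrics"
    mapping where higher is better. The candidate is promoted only if no tracked
    metric drops by more than `max_regression`; otherwise the current version stays.
    """
    for name, baseline in current["metrics"].items():
        new_value = candidate["metrics"].get(name)
        if new_value is None:
            return current["version"], f"rollback: {name} missing from candidate"
        if new_value < baseline - max_regression:
            return current["version"], f"rollback: {name} fell from {baseline} to {new_value}"
    return candidate["version"], "promoted"


# Hypothetical evaluation results for two model versions.
live = {"version": "v1.4", "metrics": {"accuracy": 0.90, "recall": 0.80}}
better = {"version": "v1.5", "metrics": {"accuracy": 0.92, "recall": 0.85}}
worse = {"version": "v1.6", "metrics": {"accuracy": 0.93, "recall": 0.70}}

print(choose_release(live, better))
print(choose_release(live, worse))
```

Running a gate like this automatically on every candidate keeps release cycles short without skipping the evaluation step.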

The team should have skilled people in data science, machine learning, software development, and the specific domain. They should learn from their experiences and reflect on their progress. It is important to balance what is known and what is unknown, and to consider both technical and human factors in the development process.


Customer Expectations

To begin with, when customers have high expectations of the product, quality assurance must be executed with precision. AI products that can potentially harm customers’ belongings or physical well-being during quality incidents, such as automated driving or financial transactions, are held to a higher standard than those that are harmless, such as free hobby products. This applies regardless of whether the development process is inductive or deductive.

On the other hand, if customers have negative expectations, meaning they lack an understanding of AI products’ characteristics, quality assurance becomes a challenging task. These customers may falsely believe that the product and development team will always function flawlessly without requiring any specific input from them. However, quality assurance teams, along with sales and development, must manage customer expectations appropriately by enhancing their comprehension of the product.

Therefore, quality assurance must strive to establish a culture, environment, and working style that respects customers’ need to feel convinced by the product and enables decisions to be made at the appropriate level of authority. AI product quality assurance teams must go beyond the narrow limits of deductive development and take all necessary actions to ensure quality while also acknowledging all stakeholders.


Conclusion

Quality assurance is not an option when it comes to AI products. It is a necessity that can make or break the growth of an app.

Customers have high expectations, and failure to meet those expectations can lead to disastrous consequences. Whether it’s automated driving or financial transactions, the stakes are high, and the potential for damage is significant.

The role of QA engineers, teams, and organizations is vital in ensuring that the product meets the customer’s expectations and delivers the intended benefits. It is also important to manage customer expectations by educating customers on the characteristics of the AI product.

We hope that the tips in this article will help you create a flawless user experience. At Pecode, we’re always ready to assist you with your needs in quality assurance for AI products and projects in many other industries. Contact us at hello@pecodesoftware.com to polish your products to perfection.